Switch-API Python Package

Overview

A Python package for interacting with the Switch Automation platform to retrieve and ingest data as well as register and deploy Tasks that automate processes to ingest, transfer and/or analyze data.

Installing the Package

The switch-api Python Package is distributed via pypi.org and can be found at: https://pypi.org/project/switch-api/

The package can be installed with pip: `pip install switch-api`

Importing the Package

When importing the switch-api package, we typically use the alias 'sw', for example `import switch_api as sw`.

Initializing Authorization

Authorization is managed by initializing an api_inputs object within your Python script. When the script is executed, a login page opens in your web browser, where you log in with your Switch Platform credentials. The resulting api_inputs are then passed as a parameter to each function within the Switch API, which provides the authorization. Deployed Tasks within Task Insights will have valid api_inputs passed to the Task whenever it is triggered.

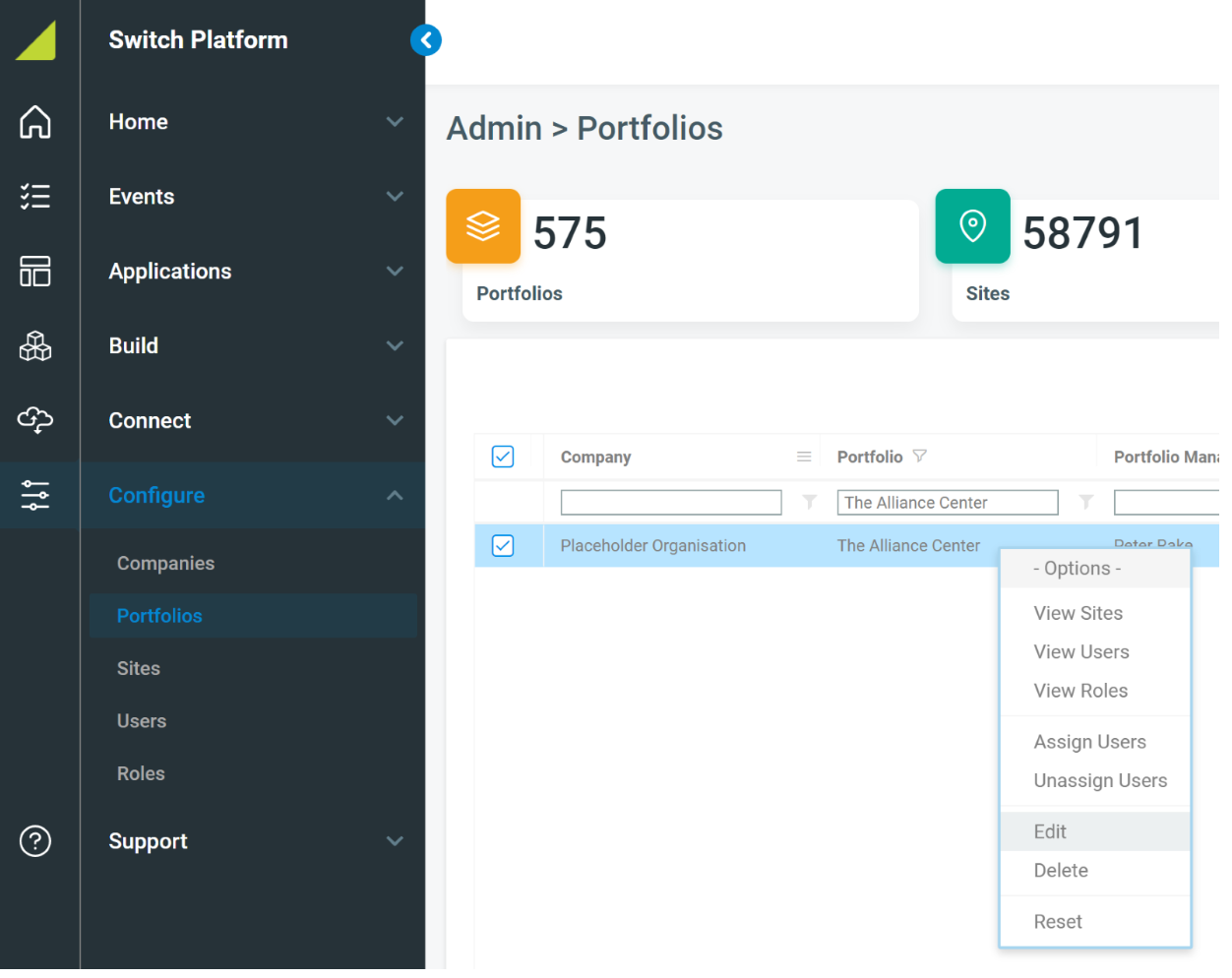

First, you need to locate the Portfolio Id (aka Api Project Id) of the portfolio that you want to interact with. You can do this by logging into the Switch Platform and navigating to Configure / Portfolios.

From Configure / Portfolios, locate the desired Portfolio in the grid, then right-click on the row for that portfolio and select Edit from the menu that appears.

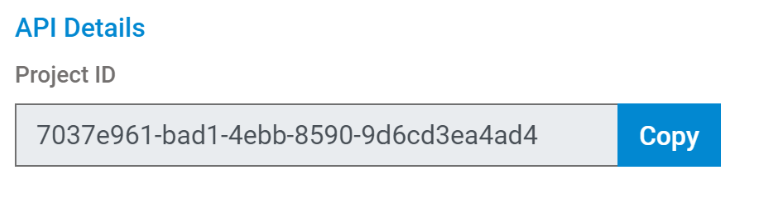

From the Edit page that appears, the Portfolio Id can be copied from the bottom left of the page.

You can then initialize the api_inputs using this Portfolio Id as the api_project_id.
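The initialization step can be sketched as below. This is a minimal sketch, assuming the package is imported as `switch_api` and exposes an `initialize` function that accepts an `api_project_id` keyword; check the package documentation for the exact signature. It also assumes the Portfolio Id is a UUID string, so the hypothetical helper `parse_portfolio_id` validates it before any browser login is triggered.

```python
import uuid


def parse_portfolio_id(raw: str) -> str:
    """Return the Portfolio Id in canonical lowercase form, or raise ValueError.

    Assumption: Portfolio Ids are UUIDs. This is not stated in the text above.
    """
    return str(uuid.UUID(raw.strip()))


def init_api_inputs(portfolio_id: str):
    """Initialize api_inputs for one portfolio (opens a browser login page)."""
    # Imported lazily so the validation helper works without the package.
    import switch_api as sw  # assumed import name

    # Assumed call: `initialize` and its keyword are not confirmed here.
    return sw.initialize(api_project_id=parse_portfolio_id(portfolio_id))
```

Validating the id first means a mistyped value fails immediately instead of after the login page has opened.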

Dataset Module

A module for interacting with Data Sets from the Switch Automation Platform. Provides the ability to list Data Sets and their folder structure as well as retrieve Data Sets with the option to pass parameters.

The simplest way to navigate through and identify Data Sets is from the Switch Platform. Once you’ve identified a Data Set that you want to access from the Switch API, you’ll need to get its dataset_id, which can be found by opening the Data Set in the Switch Platform and clicking the ‘Copy dataset Id’ button near the top right of the page.

You can then request the data from the Data Set using its dataset_id.
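A request for a Data Set might look like the sketch below. It assumes the Dataset module is reachable as `sw.dataset` and offers a `get_data` function taking `api_inputs`, `dataset_id`, and optional `parameters`; these names and keywords are assumptions, so confirm them against the package before use. The wrapper `fetch_data_set` is hypothetical and receives the imported module as an argument so the call shape is easy to see.

```python
from typing import Any, Optional


def fetch_data_set(sw: Any, api_inputs: Any, dataset_id: str,
                   parameters: Optional[list] = None) -> Any:
    """Request one Data Set; `sw` is the imported switch_api module."""
    if not dataset_id:
        raise ValueError("dataset_id must not be empty")
    # Assumed call shape: dataset.get_data(api_inputs, dataset_id, parameters)
    return sw.dataset.get_data(
        api_inputs=api_inputs,
        dataset_id=dataset_id,
        parameters=parameters,
    )
```

With the package installed, the call would be `fetch_data_set(sw, api_inputs, dataset_id)`, where `dataset_id` is the value copied from the Switch Platform.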

Integration Overview

This section will discuss the overarching structure of an integration while subsequent sections will detail specific methods for achieving the integrations.

Integrations generally fall into one of two categories: Data Feed and API. Data Feed integrations involve the delivery of data through one of the supported protocols (Email, FTP, API Endpoint Upload), followed by processing and ingestion into the Switch Automation platform. API integrations involve the request of data from an external API, which is set up to occur on a predefined schedule, followed by processing and ingestion into the Switch Automation platform.

Often, the data being ingested into the Switch Automation platform (e.g., Readings, Tags, Workorders) must be associated with existing Assets (e.g., Sites or Sensors/Points). This requires requesting those Assets from the Switch Automation platform and then joining them to the externally sourced data. In some cases, new Assets need to be created so that novel data will have something to be associated with. Once the associations have been made and any further data processing is completed, the data can be ingested into the Switch Automation platform.
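The association step described above can be sketched in plain Python. This is an illustration only: the field names (`external_id`, `sensor_id`, `value`) and the function `associate_readings` are hypothetical and do not reflect the platform's actual schema. Records that match an existing Asset are ready for ingestion, while unmatched records indicate Assets that may need to be created first.

```python
from typing import Dict, Iterable, List, Tuple

Reading = Dict[str, object]


def associate_readings(
    readings: Iterable[Reading],
    sensors_by_external_id: Dict[str, str],
) -> Tuple[List[Reading], List[Reading]]:
    """Join external readings to existing sensors by a shared external id.

    Returns (matched, unmatched). Matched readings gain a 'sensor_id' key;
    unmatched readings are returned unchanged.
    """
    matched: List[Reading] = []
    unmatched: List[Reading] = []
    for reading in readings:
        sensor_id = sensors_by_external_id.get(str(reading["external_id"]))
        if sensor_id is None:
            unmatched.append(reading)
        else:
            matched.append({**reading, "sensor_id": sensor_id})
    return matched, unmatched


# Example data standing in for Assets retrieved from the platform
# and readings pulled from an external source.
existing_sensors = {"meter-1": "S-100"}
external_readings = [
    {"external_id": "meter-1", "value": 3.5},
    {"external_id": "meter-9", "value": 1.0},
]
ready, needs_asset = associate_readings(external_readings, existing_sensors)
```

Keeping the unmatched records separate makes the "create new Assets" case explicit instead of silently dropping data.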

Integration Module

A module for integrating data with the Switch Automation Platform. Provides the ability to create, update, and retrieve assets (i.e., devices, sensors, sites, workorders, tags, metadata); ingest and retrieve sensor readings; and ingest and retrieve data from Azure Data Explorer (ADX).

Methods for Retrieving Assets and Data:

Methods for Updating and/or Creating Assets:

Methods for Ingesting Data into Pre-defined Data Tables Natively Supported by the Switch Automation platform:

Pipeline Module

Module defining the Task types. A deployed integration or analytics process must be one of these Task types, and all Python code that is to be executed must be contained within one of the pre-defined methods of these Tasks. External users of the Switch API should feel empowered to develop IntegrationTasks and AnalyticsTasks, but other Task types have a more complicated interaction with the Switch Automation platform and should be developed in coordination with Switch Automation’s Software Development and Data Science teams.

IntegrationTask

Base class used to create integrations between the Switch Automation Platform and other platforms, low-level services, or hardware.

Examples include:

- Pulling readings or other types of data from REST APIs

- Protocol Translators which ingest data sent to the platform via email, FTP or direct upload within the platform.

AnalyticsTask

Base class used to create specific analytics functionality which may leverage existing data from the platform. Each task may add value to, or supplement, this data and write it back.

Examples include:

- Anomaly Detection

- Leaky Pipes

- Peer Tracking

DiscoverableIntegrationTask

Base class used to create integrations between the Switch Automation Platform and 3rd party APIs. Similar to the IntegrationTask, but includes a secondary method `run_discovery()` which triggers discovery of available points on the 3rd party API and upserts these records to the Switch Platform backend so that the records are available in the Build / Discovery & Selection UI. These Tasks require coordination with Switch Automation’s Software Development team to ensure that the discovery functionality is supported by the Switch Platform backend.

Examples include:

- Pulling readings or other types of data from REST APIs
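As a rough illustration of how discovery and processing divide the work, the sketch below uses a stand-in base class rather than the package's own DiscoverableIntegrationTask. Only `run_discovery()` is named in the description above; the `process` method name, both signatures, the settings key, and the point dictionaries are assumptions made for illustration.

```python
from abc import ABC, abstractmethod
from typing import Any, Dict, List


class DiscoverableTaskSketch(ABC):
    """Stand-in for illustration; NOT the package's actual base class."""

    @abstractmethod
    def process(self, api_inputs: Any, settings: Dict[str, Any]) -> Any:
        """Main integration step (hypothetical name and signature)."""

    @abstractmethod
    def run_discovery(self, api_inputs: Any, settings: Dict[str, Any]) -> Any:
        """List the points that the 3rd party API makes available."""


class ExampleVendorTask(DiscoverableTaskSketch):
    def __init__(self, vendor_points: Dict[str, float]):
        # A dict of point id -> latest value stands in for a 3rd party API.
        self._vendor_points = vendor_points

    def run_discovery(self, api_inputs, settings) -> List[Dict[str, Any]]:
        # A real Task would upsert these records to the platform backend.
        return [{"point_id": p, "selected": False}
                for p in sorted(self._vendor_points)]

    def process(self, api_inputs, settings) -> Dict[str, float]:
        # Only points chosen after discovery are read from the vendor.
        return {p: self._vendor_points[p]
                for p in settings["selected_points"]
                if p in self._vendor_points}
```

Discovery reports what could be integrated, and processing then handles only the points a user has selected.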

QueueTask

Base class used to create data pipelines that are fed via a queue.

EventWorkOrderTask

Base class used to create work orders in 3rd party systems via tasks that are created in the Events UI of the Switch Automation Platform.

Automation Module

A module containing methods used to register, deploy, cancel, and test Tasks. Includes helper functions for retrieving details of existing Tasks and their deployments, including process history and logs, on the Switch Automation Platform.

Before deploying a Task, you must first register the Task with the Switch Automation platform. The registration process stores the Task object and its code for later deployment using whichever deployment method is desired.

Register Tasks, Deploy Tasks, and cancel Task Deployments:


Deployment Environment

When deploying a Task within Task Insights, the following Python environment will be used to execute the Task script. If you need to utilize a Python Package that is not listed below, please contact Switch Automation to see if it can be installed on the containers running the Task scripts.

Python Version:

Python 3.9.5

Python Packages:

- azure-common 1.1.28

- azure-core 1.23.1

- azure-servicebus 7.6.0

- azure-storage-blob 12.11.0

- msal 1.17.0

- msrest 0.6.21

- numpy 1.22.3

- pandas 1.4.2

- pandas-stubs 1.2.0.38

- pandera 0.7.1

- paramiko 2.10.3

- pyodbc 4.0.32

- pysftp 0.2.9

- requests 2.27.1

- requests-oauthlib 1.3.1

- openpyxl 3.0.9

- tabulate 0.8.10

- xmltodict 0.12.0
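To compare a local development environment with the versions listed above, a small script along these lines may help. It is a sketch that relies only on the standard library's `importlib.metadata`; the function name `compare_with_pinned` is hypothetical, and the three pinned entries are copied from the list and can be extended as needed.

```python
from importlib import metadata
from typing import Callable, Dict, Optional, Tuple

# A subset of the deployment environment's package versions.
PINNED = {"numpy": "1.22.3", "pandas": "1.4.2", "requests": "2.27.1"}


def compare_with_pinned(
    pinned: Dict[str, str],
    get_version: Callable[[str], str] = metadata.version,
) -> Dict[str, Tuple[str, Optional[str], bool]]:
    """Map each package to (expected, installed, versions_match).

    `installed` is None when the package is not installed locally.
    """
    report = {}
    for name, expected in pinned.items():
        try:
            installed = get_version(name)
        except metadata.PackageNotFoundError:
            installed = None
        report[name] = (expected, installed, installed == expected)
    return report


if __name__ == "__main__":
    for name, (expected, installed, ok) in compare_with_pinned(PINNED).items():
        status = "ok" if ok else "differs"
        print(f"{name}: expected {expected}, installed {installed} ({status})")
```

A mismatch does not necessarily mean a Task will fail, but it is a useful first check when code behaves differently locally and when deployed.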
